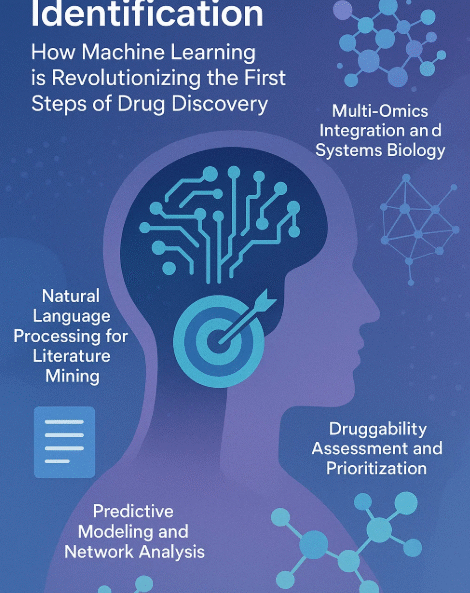

AI-Powered Target Identification: How Machine Learning is Revolutionizing the First Steps of Drug Discovery

Introduction

The pharmaceutical industry stands at a pivotal moment. After decades of relying on serendipity and laborious experimental methods, drug discovery is being transformed by artificial intelligence (AI) and machine learning (ML). At the heart of this shift lies target identification, the critical first step that strongly influences whether a drug development program will succeed or fail. Traditional drug development has been plagued by high failure rates: only about 10% of candidates entering clinical trials ultimately reach approval, often because of poorly validated targets. Today, AI-powered approaches are compressing discovery timelines, in some cases from years to weeks, while unlocking novel therapeutic avenues previously hidden in vast biological datasets. However, the success of these algorithms depends on one crucial factor: the quality of the data they consume. This is where expert data curation becomes the unsung hero of AI-driven drug discovery, and where companies like Saturo Global Research Services are making the difference between algorithmic promise and real-world breakthrough.

The Limitations of Traditional Target Discovery

For decades, target identification has relied on hypothesis-driven research, painstaking literature reviews, and resource-intensive experimental validation. Researchers would spend months or years analyzing gene knockout studies, conducting biochemical assays, and manually sifting through scientific publications to identify potential therapeutic targets. This traditional approach faces several critical challenges:

Data Complexity and Scale:

Modern biological research generates terabytes of heterogeneous data across multiple domains: genomics, proteomics, transcriptomics, metabolomics, and clinical information. Human researchers can only process a fraction of this information, leading to missed opportunities and overlooked connections.

Time and Resource Constraints:

Validating a single target through conventional methods can take years, delaying potentially life-saving treatments for patients while consuming significant financial resources.

Bias and Inconsistency:

Legacy datasets often lack standardization, contain mislabeled samples, and reflect historical research biases. This fragmented landscape makes it difficult to draw reliable conclusions about target-disease relationships.

Limited Pattern Recognition:

Human analysis, while expert-driven, cannot detect the subtle correlations and complex multi-dimensional patterns that exist within massive biological datasets.

AI’s Transformative Role in Target Identification

Machine learning algorithms excel at analyzing vast, complex datasets to uncover hidden patterns and predict high-value targets with therapeutic potential. The AI revolution in target identification encompasses several key technological advances:

Multi-Omics Integration and Systems Biology

Modern ML models can synthesize genomic, transcriptomic, proteomic, and metabolomic data to map comprehensive disease pathways. By integrating these diverse data types, AI systems can identify targets that play critical roles in disease mechanisms while considering the full biological context.

Predictive Modeling and Network Analysis

Advanced algorithms can construct and analyze intricate biological networks, identifying key “hub” proteins or genes that, if modulated, could have significant therapeutic effects. Tools like deep learning models trained on chromatin imaging data can infer gene regulatory networks, accelerating target validation by linking physical DNA organization to biochemical activity.

Natural Language Processing for Literature Mining

The wealth of knowledge locked within millions of scientific publications and patents represents an enormous, largely untapped resource. NLP algorithms can rapidly scan, interpret, and extract relevant information about potential targets, disease associations, and experimental evidence, transforming literature review from a months-long process to a matter of days.

Generative AI and Novel Target Discovery

Large language models (LLMs) can mine scientific literature and patent databases to propose entirely novel targets, reducing reliance on serendipity and conventional thinking. These systems can identify unconventional therapeutic hypotheses that might never have occurred to human researchers.

Druggability Assessment and Prioritization

Once potential targets are identified, ML models can predict their “druggability”, the likelihood that they can be effectively modulated by drug-like molecules. This helps prioritize targets that are not only biologically relevant but also pharmacologically accessible.

Real-world success stories are already emerging. AI-driven platforms have identified promising targets for cancers and neurodegenerative diseases, with several candidates now entering clinical trials, demonstrating the practical impact of these technologies.

The Critical Foundation: Data Quality and Expert Curation

While AI models capture headlines with their impressive capabilities, their performance fundamentally depends on the quality, structure, and integrity of the underlying data. For the pharmaceutical industry, the “garbage in, garbage out” principle has never been more relevant.

The Data Quality Challenge

Poor data quality manifests in several ways that can derail even the most sophisticated algorithms:

Noise and Errors:

Public datasets like TCGA (The Cancer Genome Atlas) or GEO (Gene Expression Omnibus) often contain mislabeled samples, inconsistent metadata, and experimental artifacts that require meticulous cleaning and validation.

Bias Amplification:

Unrepresentative or historically biased datasets can lead to skewed predictions that disadvantage underrepresented populations or overlook important biological subtleties.

Integration Hurdles:

Combining structured data (clinical trials, databases) with unstructured information (research papers, patents) demands both technical expertise and deep domain knowledge.

Standardization Issues:

Different databases use varying nomenclatures, ontologies, and formatting standards, creating barriers to effective data integration and model training.

The Curation Imperative

Expert data curation transforms raw, heterogeneous datasets into AI-ready resources that enable reliable predictions and discoveries. This process involves multiple critical steps:

- Data Harmonization: Unifying metadata, resolving labeling conflicts, and standardizing annotations across diverse sources

- Quality Validation: Cross-referencing against primary sources and applying rigorous quality control measures

- Bias Mitigation: Ensuring balanced representation and applying fairness-aware algorithms during dataset preparation

- Contextual Enrichment: Adding biological context through expert annotation and mapping to established ontologies and pathways
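
To make the harmonization step above more concrete, here is a minimal sketch in Python. It is not Saturo Global's framework or any particular vendor's pipeline; the alias tables, field names, and the `harmonize_record` helper are hypothetical and hand-written for illustration. A real workflow would presumably load an official nomenclature resource and a controlled vocabulary rather than small hard-coded dictionaries.

```python
# Hypothetical, hand-written lookup tables used only for this sketch.
# A production pipeline would load curated nomenclature resources instead.
GENE_ALIASES = {"HER2": "ERBB2", "NEU": "ERBB2", "P53": "TP53"}
TISSUE_LABELS = {"mammary gland": "breast", "breast tissue": "breast"}


def harmonize_record(record):
    """Return a copy of a metadata record with unified gene and tissue labels."""
    # Simplification: upper-casing is not safe for every official symbol
    # (some contain lowercase letters), but it keeps the sketch short.
    gene = record["gene"].strip().upper()
    tissue = record["tissue"].strip().lower()
    return {
        **record,
        "gene": GENE_ALIASES.get(gene, gene),
        "tissue": TISSUE_LABELS.get(tissue, tissue),
    }


# Three records describing the same kind of sample with inconsistent labels.
raw_records = [
    {"sample_id": "S1", "gene": "HER2", "tissue": "Mammary gland"},
    {"sample_id": "S2", "gene": " erbb2", "tissue": "Breast"},
    {"sample_id": "S3", "gene": "p53", "tissue": "Breast tissue"},
]

harmonized = [harmonize_record(r) for r in raw_records]
for rec in harmonized:
    print(rec)
```

After harmonization, records that refer to the same gene and tissue under different names share one label, so a downstream model no longer treats them as unrelated entities.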

Saturo Global: Pioneering AI-Ready Data Solutions for Drug Discovery

Saturo Global Research Services stands at the forefront of the data curation revolution, empowering pharmaceutical and biotechnology companies to fully leverage AI’s transformative potential. With a team of over 200 domain experts, data scientists, and bioinformaticians, Saturo Global bridges the critical gap between raw biological data and actionable AI-driven insights.

Comprehensive Data Curation and Integration

Saturo Global systematically aggregates data from scientific literature, public databases, clinical trial repositories, patent databases, and proprietary sources. This comprehensive approach ensures that no relevant information is overlooked while maintaining the highest standards of data quality and consistency. The company’s proprietary curation framework includes:

- Manual Expert Curation: PhD-level scientists and domain experts meticulously unify metadata, resolve labeling conflicts, and validate data against primary sources

- Automated Quality Assurance: Sophisticated algorithms detect and flag inconsistencies, duplicates, and potential errors

- Continuous Updates: Real-time monitoring and updating of datasets to reflect the latest scientific discoveries and regulatory changes
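
As a rough illustration of what automated quality assurance of this kind can look like, the sketch below flags duplicate entries and conflicting disease labels for the same sample. It is an assumption-laden toy, not a description of Saturo Global's proprietary algorithms: the record fields (`sample_id`, `disease`) and the `flag_issues` function are hypothetical names chosen for the example.

```python
from collections import defaultdict


def flag_issues(records):
    """Group records by sample_id and flag duplicates and label conflicts."""
    by_sample = defaultdict(list)
    for rec in records:
        by_sample[rec["sample_id"]].append(rec)

    flags = []
    for sample_id, group in by_sample.items():
        labels = {rec["disease"] for rec in group}
        if len(labels) > 1:
            # Same sample annotated with different diseases: needs expert review.
            flags.append((sample_id, "conflicting_labels", sorted(labels)))
        elif len(group) > 1:
            # Same sample and same label more than once: likely a duplicate.
            flags.append((sample_id, "duplicate", sorted(labels)))
    return flags


# Hypothetical records merged from several sources.
records = [
    {"sample_id": "S1", "disease": "glioma"},
    {"sample_id": "S1", "disease": "glioma"},
    {"sample_id": "S2", "disease": "melanoma"},
    {"sample_id": "S2", "disease": "lung adenocarcinoma"},
    {"sample_id": "S3", "disease": "glioma"},
]

issues = flag_issues(records)
for issue in issues:
    print(issue)
```

The function only flags records and never deletes or rewrites them, which matches the division of labor described above: automated checks surface candidates, and expert curators decide how each one is resolved.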

AI-Optimized Data Products

Saturo Global delivers structured data products specifically designed for machine learning applications:

Curated Datasets for ML Training:

Enriched with unified annotations, gene-disease associations, and pathway mappings that enable robust model development

Knowledge Graph Construction:

Custom knowledge graphs that represent complex relationships between genes, proteins, diseases, drugs, and other relevant entities, providing a powerful foundation for AI-driven hypothesis generation

Patent Analytics:

AI-driven intellectual property insights that help identify white-space opportunities while avoiding litigation risks

Custom Integration Pipelines:

Tailored workflows that seamlessly integrate omics data, clinical records, and real-world evidence into predictive models

Proven Impact and Results

The real-world impact of Saturo Global’s approach is demonstrated through client success stories. For example, a top-10 pharmaceutical company reduced target validation timelines by 40% using Saturo’s curated genomic datasets and AI-driven prioritization tools. This acceleration translates directly into faster time-to-market for potentially life-saving therapies. Organizations leveraging Saturo Global’s curated datasets for ML-powered target discovery consistently report:

- 50-70% reduction in lead identification time

- Enhanced novel target discovery rates

- Improved reproducibility and model explainability

- Greater confidence in advancing targets to validation and screening phases

Scalable Solutions for Diverse Needs

Recognizing that each drug discovery project is unique, Saturo Global offers customized solutions tailored to specific research requirements:

- Therapeutic Area Specialization: Custom knowledgebases focused on specific disease areas such as oncology, neurology, or immunology

- Collaborative Research Support: Secure data-sharing platforms that facilitate multi-institutional drug discovery partnerships

- Regulatory Compliance: Data governance frameworks that ensure compliance with evolving regulatory requirements
